I have decided that Proxmox is not challenging or interesting enough to keep me trying stuff and investing learning time in it. Yeah, a lot of Proxmox lovers will hate me for saying that, but the time for a new platform and a new way of doing things is upon us. And as you may have already noticed, I don’t do VMware stuff anymore.

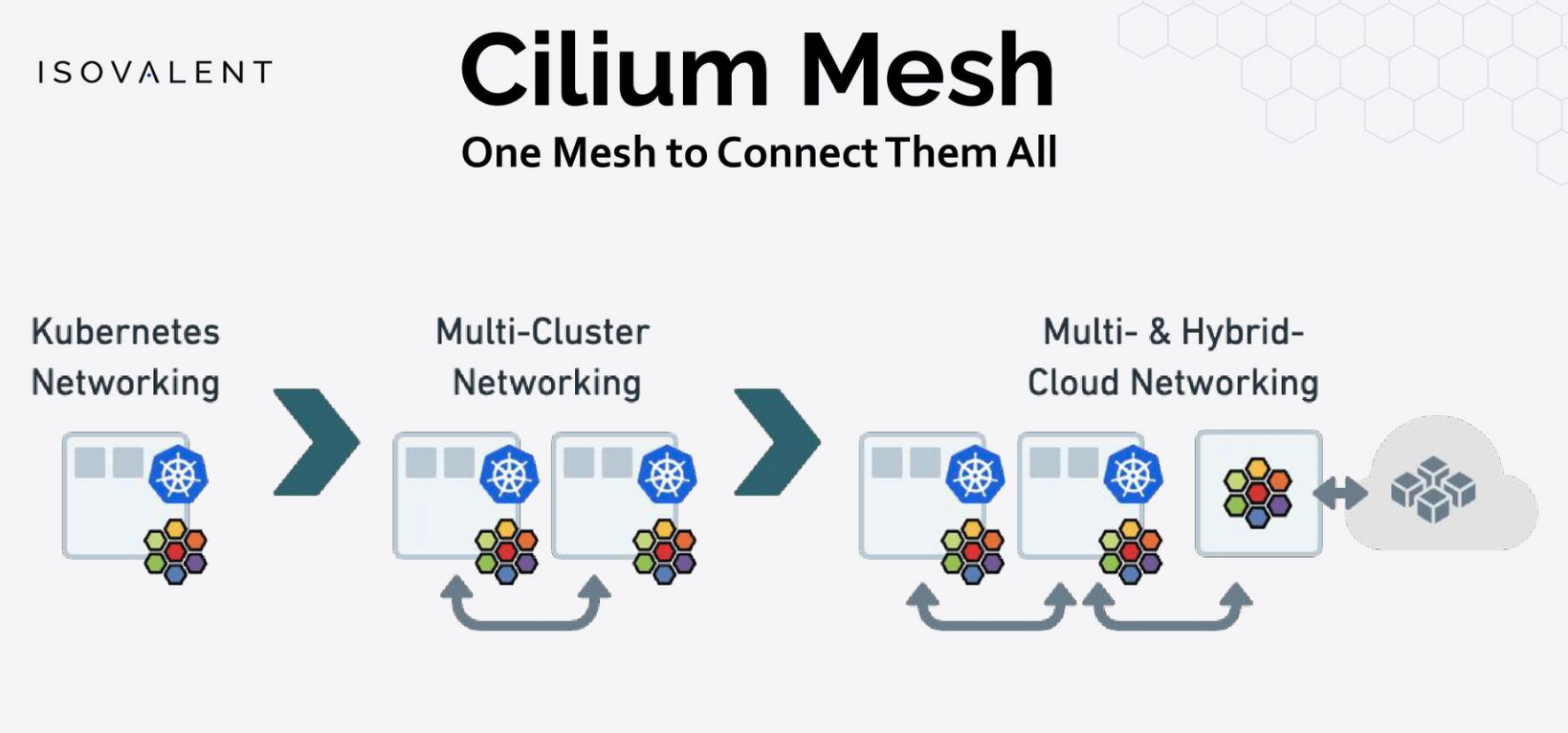

I just want to run Kubernetes: a lot of Kubernetes clusters for testing solutions like Cilium and others. My real issue with Proxmox is the integration with automation tools like Terraform (there is no official provider for it), and the storage plugins for consuming Proxmox storage on Kubernetes are terrible. I have always liked Harvester, and its latest integration with downstream clusters that run on Harvester and are deployed with Rancher is awesome!

So, this is the plan.

I am currently running a 3-node Proxmox cluster with Ceph and a few NVMe and Intel SSD drives that are good enough for decent storage performance. I will remove 2 nodes from that cluster and convert them to Harvester, which now supports a 2-node cluster plus a witness node. The witness node will run on the remaining stand-alone Proxmox host (Harvester doesn’t have USB pass-through yet, and I run unRAID on that host).
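
Why bother with a witness at all? Here is a rough sketch of the quorum math, assuming the cluster datastore uses majority voting the way etcd does. The function names are made up for illustration and are not part of Harvester or any other tool.

```python
# Majority-quorum arithmetic (assumption: etcd-style voting).
# These helper names are hypothetical, for illustration only.

def quorum(members: int) -> int:
    """Smallest number of voting members that forms a majority."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many voting members can be lost while keeping a majority."""
    return members - quorum(members)


if __name__ == "__main__":
    for members in (2, 3):
        print(
            f"{members} voters: quorum={quorum(members)}, "
            f"can lose {tolerated_failures(members)}"
        )
```

With only 2 voters, losing either one breaks the majority. Adding a witness as a third voter lets the cluster survive the loss of one member, which is the point of the 2-node plus witness layout.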

The 2 HP Z440s will be my new 2-node Harvester cluster.

This machine will have 4 drives: 2 NVMe and 2 Intel SSDs. On the memory side, there will be 256GB of RAM available and enough CPU to run at least 3 or 4 RKE2 clusters for testing and production stuff.

I’ll update on the progress…