For a few months I’ve been reading about Ceph and how it works. I love distributed stuff; maybe it’s because I have multiple machines, and the idea of clustering has always fascinated me. In Ceph, the more the better!

Ceph performance on multiple machines with lots of SSD/NVMe drives will be very different from a 3-node cluster with only one OSD per node. The latter is my case, and the setup has been working well.

Installing Ceph on Proxmox takes just a few clicks, and the process is already documented at https://pve.proxmox.com/wiki/Deploy_Hyper-Converged_Ceph_Cluster

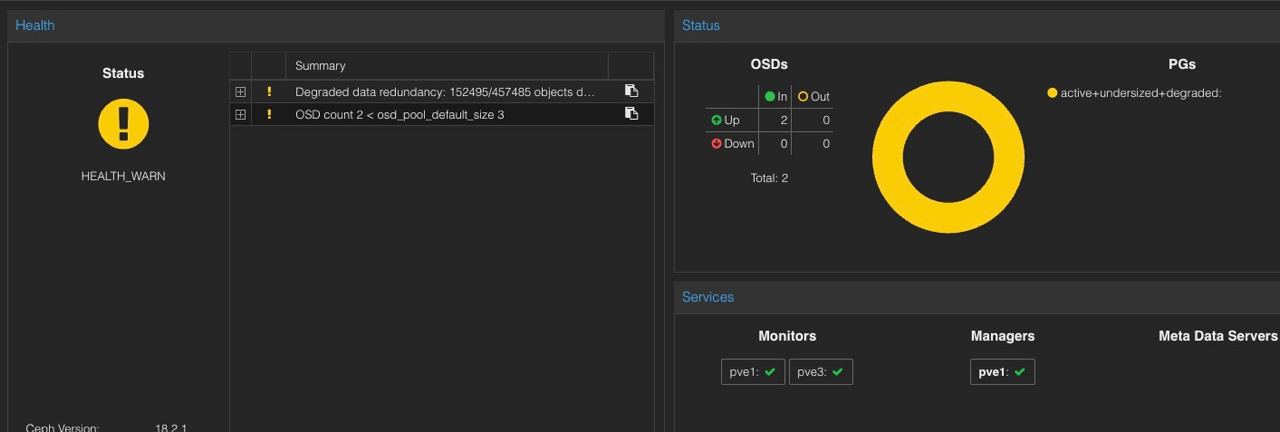

At first, I had only two nodes, and Ceph was in an unhealthy state.

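The CRUSH map created by Proxmox expects a 3-host configuration, with each host contributing at least one OSD: the default replicated pool keeps 3 copies of every placement group and puts each copy on a different host. In this picture, there were only 2 hosts with 1 OSD each, so the third copy had nowhere to go.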

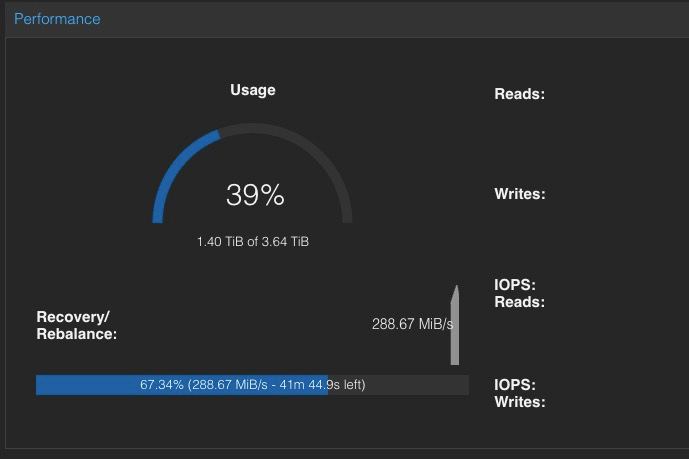

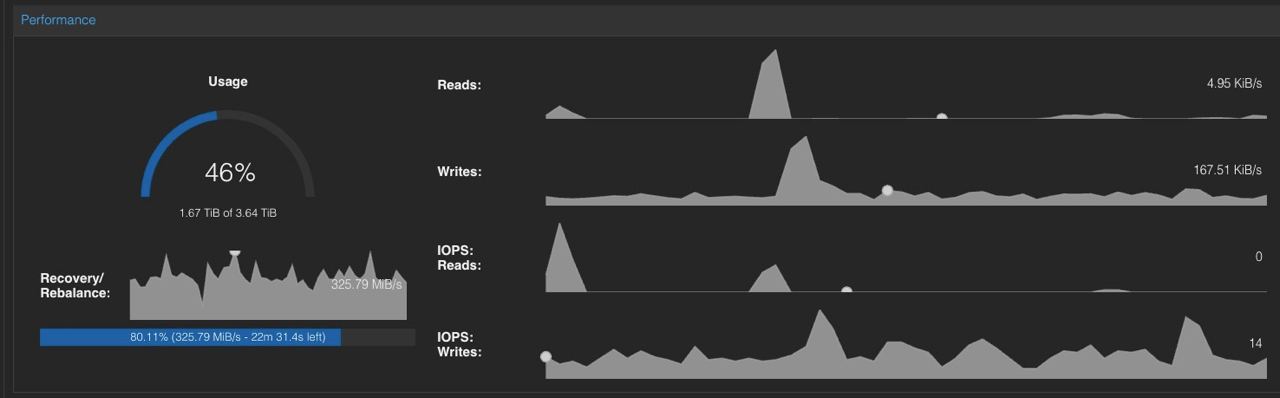

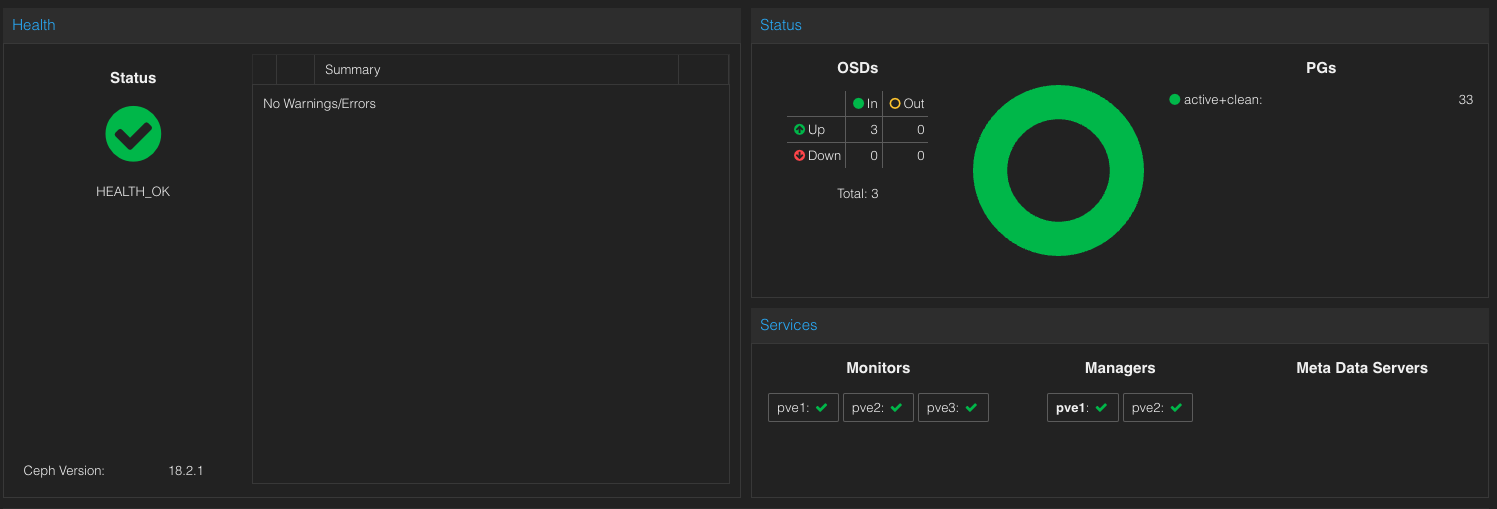
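
To make that constraint concrete, here is a minimal Python sketch of a replicated rule whose failure domain is the host. It is a simplified model, not Ceph’s CRUSH implementation: the `Host` class, the `place_replicas` and `pg_state` helpers, and the host names `pve1`, `pve2` and `pve3` are hypothetical, and it assumes the Proxmox pool defaults of `size=3` and `min_size=2`.

```python
from dataclasses import dataclass, field

# Simplified model of a replicated rule with failure domain "host":
# every replica of a placement group (PG) must land on a different host.
# This only models the constraint; it is not Ceph's CRUSH algorithm.

@dataclass
class Host:
    name: str
    osds: list[str] = field(default_factory=list)

def place_replicas(hosts: list[Host], size: int) -> list[str]:
    """Pick at most one OSD per host until `size` replicas are placed."""
    chosen: list[str] = []
    for host in hosts:
        if len(chosen) == size:
            break
        if host.osds:  # a host without OSDs cannot hold a replica
            chosen.append(host.osds[0])
    return chosen

def pg_state(hosts: list[Host], size: int = 3, min_size: int = 2) -> str:
    """Rough PG state for the given layout (assumed size/min_size defaults)."""
    placed = len(place_replicas(hosts, size))
    if placed >= size:
        return "active+clean"
    if placed >= min_size:
        return "active+undersized+degraded"  # I/O continues with fewer copies
    return "inactive"  # simplified label: below min_size, I/O to the PG blocks

two_nodes = [Host("pve1", ["osd.0"]), Host("pve2", ["osd.1"])]
three_nodes = two_nodes + [Host("pve3", ["osd.2"])]
crowded = [Host("pve1", ["osd.0", "osd.2"]), Host("pve2", ["osd.1"])]

print(pg_state(two_nodes))    # active+undersized+degraded
print(pg_state(three_nodes))  # active+clean
print(pg_state(crowded))      # active+undersized+degraded
```

The last case is the interesting one: adding a second OSD to an existing host does not help, because the replicas must sit on distinct hosts. That is why the third node was needed rather than just another disk.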

After the third node was added, Ceph started replicating data across all OSDs to satisfy the CRUSH map policy.

Here you can see the placement groups (PGs) being moved across the OSDs.

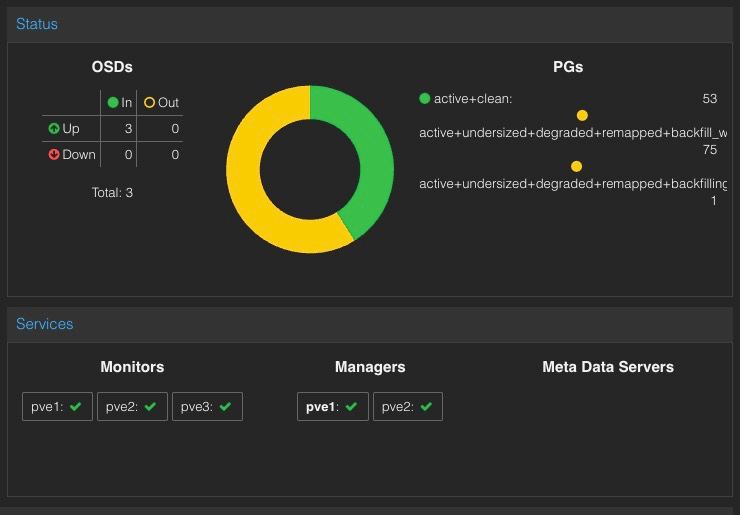

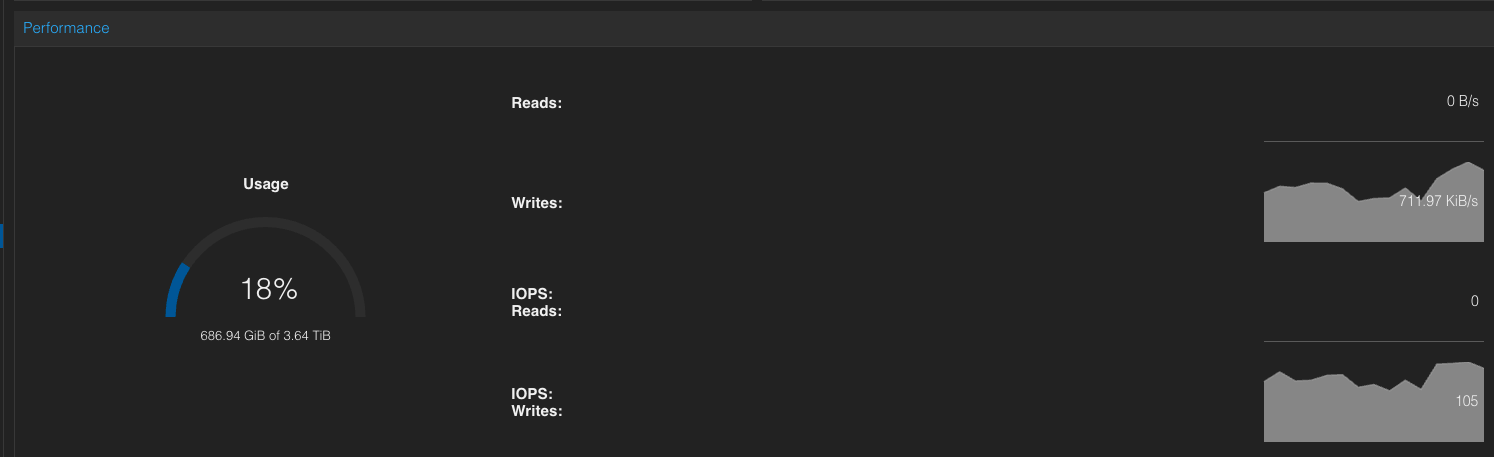
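
Why does every PG move when the third node joins? A minimal Python sketch can illustrate it, using rendezvous (highest-random-weight) hashing as a stand-in for CRUSH. Ceph’s default `straw2` buckets follow a similar idea, but this is not Ceph’s code; the helper names, the host names and the count of 128 PGs are hypothetical.

```python
import hashlib

# Rendezvous hashing: every (PG, host) pair gets a deterministic pseudo-random
# score, and each PG's replicas go to the highest-scoring distinct hosts.

def score(pg_id: int, host: str) -> int:
    digest = hashlib.sha256(f"{pg_id}:{host}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

def map_pg(pg_id: int, hosts: list[str], size: int = 3) -> set[str]:
    """Return the hosts holding this PG's replicas (at most one per host)."""
    ranked = sorted(hosts, key=lambda h: score(pg_id, h), reverse=True)
    return set(ranked[:size])

def replicas_to_copy(pg_count: int, old: list[str], new: list[str], size: int = 3) -> int:
    """Count replicas that must be written to a host that did not hold them."""
    return sum(len(map_pg(pg, new, size) - map_pg(pg, old, size)) for pg in range(pg_count))

two = ["pve1", "pve2"]
three = two + ["pve3"]
four = three + ["pve4"]

print(replicas_to_copy(128, two, three))   # 128: every PG gains its third copy on pve3
print(replicas_to_copy(128, three, four))  # hash-dependent, never more than one per PG
```

Going from two to three hosts, every PG needs a new replica, which matches the cluster-wide data movement in the screenshots. Adding a fourth host would only move one replica for the PGs where the new host ranks in the top three; the replicas on the other hosts stay where they are.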

One thing I didn’t like about storage on Proxmox is that thin provisioning is nothing like VMware VMFS! It depends on the storage backend and the format of the virtual disk. I need to get used to this.
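
Part of why it depends on the backend: on file-based storage, a thin-provisioned raw image is usually a sparse file, while qcow2 grows its own file as clusters are allocated and Ceph RBD only creates objects as data is written. The minimal Python sketch below illustrates only the sparse-file case; the 1 GiB size and the file name are arbitrary, and it assumes a Linux filesystem that supports sparse files.

```python
import os
import tempfile

SIZE = 1 << 30  # arbitrary 1 GiB "virtual disk"

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "disk.raw")  # hypothetical image name
    with open(path, "wb") as f:
        f.truncate(SIZE)         # set the apparent size without writing data
        f.write(b"\x01" * 4096)  # the "guest" writes one 4 KiB block at offset 0
    st = os.stat(path)
    apparent = st.st_size           # the disk size the VM sees
    allocated = st.st_blocks * 512  # space actually used; st_blocks counts 512-byte units on Linux

print(f"apparent: {apparent} bytes, allocated: {allocated} bytes")
```

The gap between the two numbers is the thin provisioning; how that gap is tracked and reported is exactly what differs between backends.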

This is the current state of the storage side of the Proxmox cluster. I need to move more VMs onto this storage and see how Ceph performs with more IOPS-demanding VMs.

The hardware used in this cluster is documented here: https://arielantigua.com/weblog/2024/03/ceph-as-my-storage-provider/