Ceph is the future of storage; where traditional systems fail to deliver, Ceph is designed to excel. Leverage your data for better business decisions and achieve operational excellence through scalable, intelligent, reliable, and highly available storage software. Ceph supports object, block and file storage, all in one unified storage system.

That’s the official definition from the Ceph website. Is it true?

I don’t know. I want to find out!

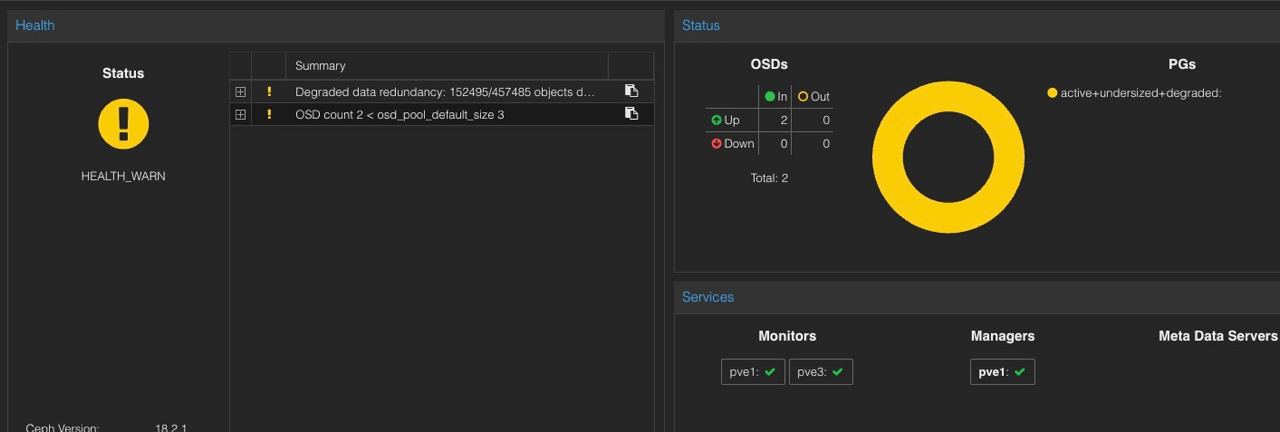

For the past few weeks I’ve been planning to install and configure Ceph on a 3-node cluster, with everything done via the Proxmox UI. One of the main issues with this solution is the storage devices. How’s that?

Well... it doesn’t like consumer SSDs, disks, or NVMe drives.

BoM:

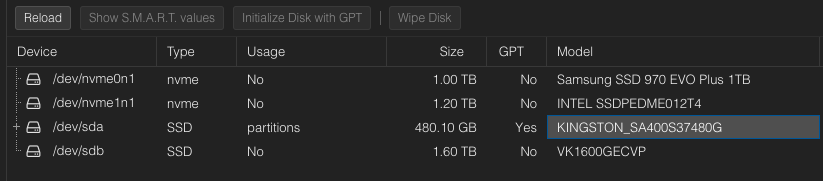

- Supermicro X9SRL with Xeon E5-2680 v2 + 128GB of RAM + Intel P3600 1.6TB PCIe NVMe

- HP Z440 with Xeon E5-2640 v4 + 128GB of RAM + Intel P3600 1.2TB PCIe NVMe

- HP Z440 with Xeon E5-2640 v4 + 64GB of RAM + Intel P3600 1.2TB PCIe NVMe

Note: the storage listed here will be used for Ceph OSDs. Each host also has a dual-port 10GbE card for replication traffic.
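A quick way to sanity-check what this BoM gives you in usable space is to do the replication arithmetic. The sketch below is only a rough estimate under stated assumptions: one OSD per host, a replicated pool with size=3 (the Proxmox default), a host-level failure domain, and a full ratio of 0.95 (what I understand to be Ceph’s default). The host labels are made up for illustration.

```python
# Hypothetical sketch: rough usable capacity for this BoM, ASSUMING one
# OSD per host, a replicated pool with size=3 (Proxmox default) and a
# host-level failure domain. Host labels are illustrative only.

# OSD size per host in TB, taken from the BoM.
OSD_TB = {"x9srl": 1.6, "z440-a": 1.2, "z440-b": 1.2}


def raw_tb(osd_tb: dict[str, float]) -> float:
    """Total raw capacity across all OSDs."""
    return sum(osd_tb.values())


def usable_tb(osd_tb: dict[str, float], full_ratio: float = 0.95) -> float:
    """Usable capacity when pool size equals the number of hosts.

    With 3 hosts and 3 replicas, each host must hold a full copy of
    every object, so the smallest host caps the pool. full_ratio
    (assumed default 0.95) is where Ceph stops accepting writes.
    """
    return min(osd_tb.values()) * full_ratio


if __name__ == "__main__":
    print(f"raw:    {raw_tb(OSD_TB):.2f} TB")
    print(f"usable: {usable_tb(OSD_TB):.2f} TB (at the full ratio)")
```

Under these assumptions, roughly 4 TB raw turns into a bit over 1.1 TB usable, and the extra 0.4 TB on the 1.6TB drive is effectively stranded. Dividing raw capacity by 3 would overestimate usable space for this uneven layout.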

I have a pair of Samsung 970 EVO Plus (1TB) drives that were working fine with vSAN ESA, but I decided to move to Intel enterprise NVMe drives because a lot of information around the web points to poor Ceph performance with this type of consumer NVMe.

The Supermicro machine is already running Proxmox, so let the Ceph adventure begin!

This picture shows one of the Z440s. It’s full in there!!