Peplink as a home gateway/firewall!

I’m a big fan of routers and firewalls. I loved running pfSense back in the day, and before m0n0wall/pfSense I used to run a custom FreeBSD firewall!

Do you remember m0n0wall?

Yes, the father of pfSense, and some may say m0n0wall is the father of OPNsense too!

About a year ago, I decided on a branded router/firewall for the home because of just one feature. Yes, a single feature made me buy this Peplink Balance 20X.

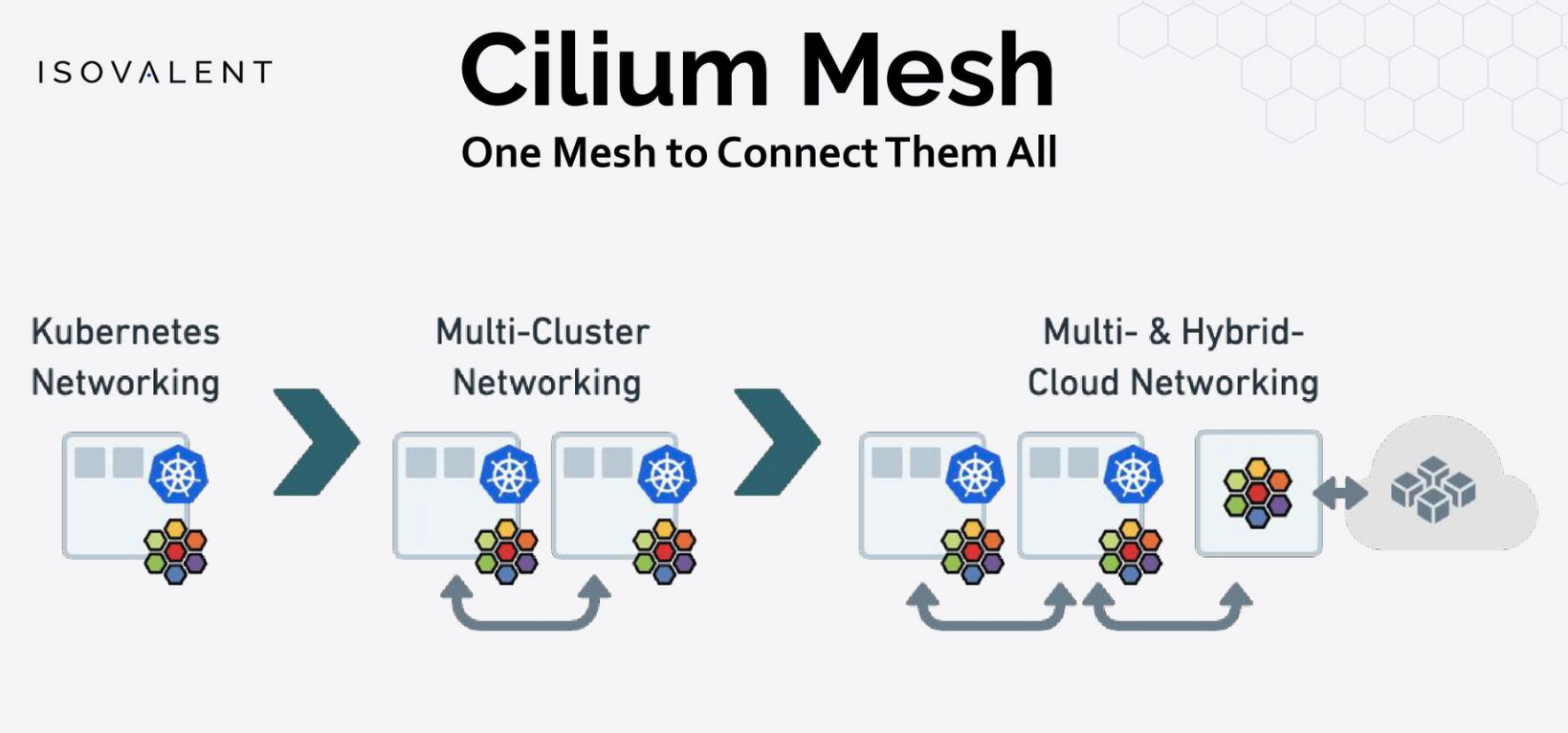

SpeedFusion

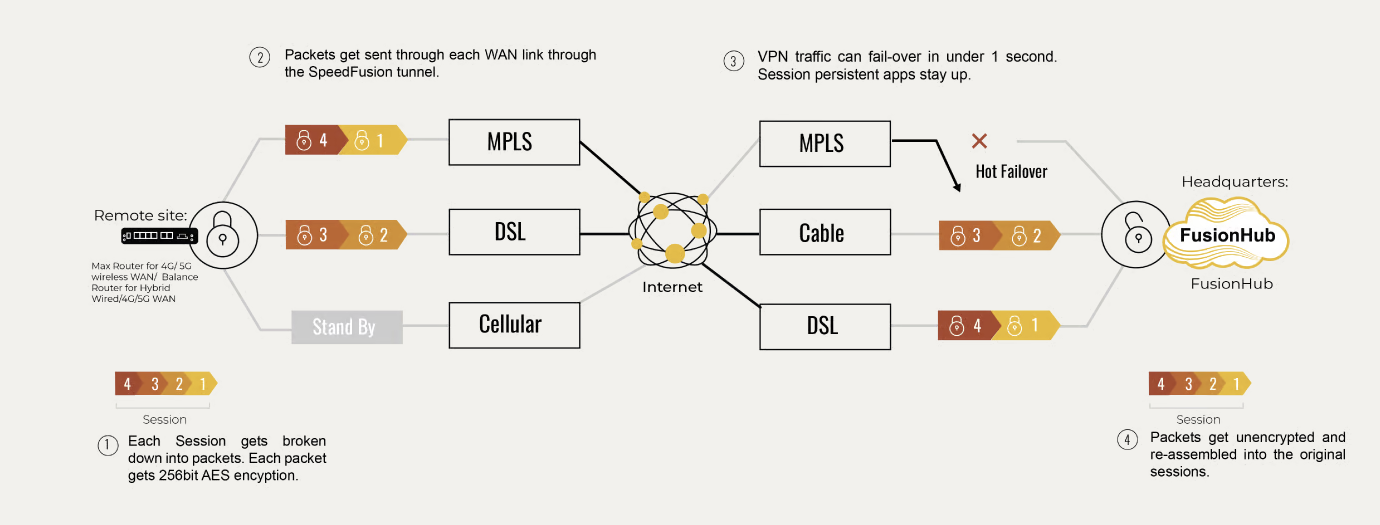

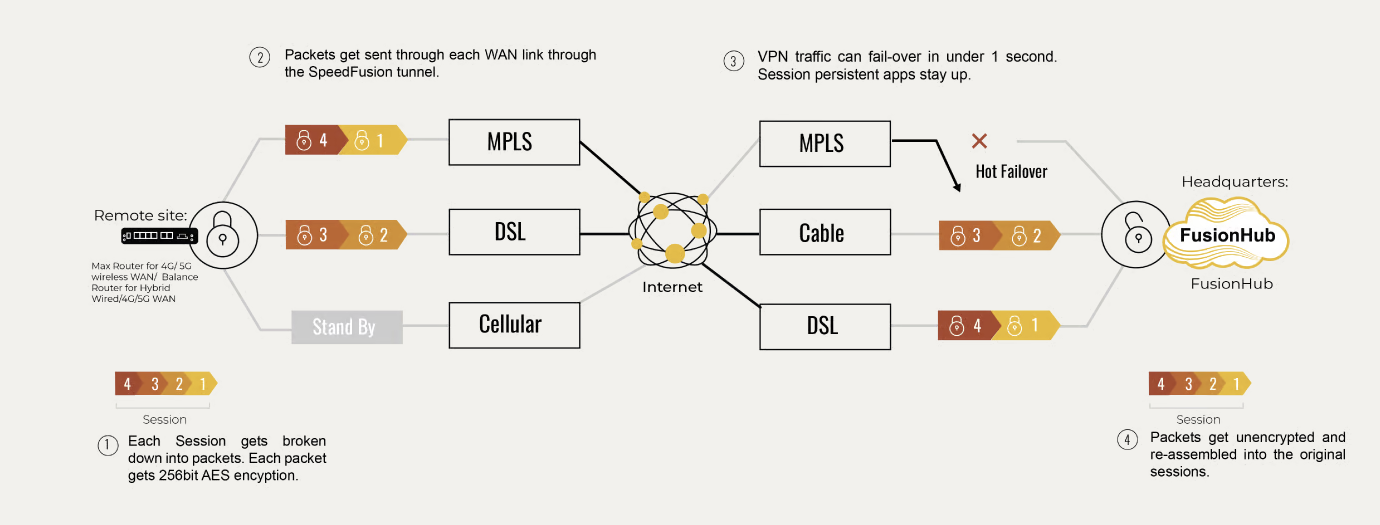

Peplink’s patented SpeedFusion technology powers enterprise VPNs that tap into the bandwidth of multiple low-cost cable, DSL, 3G/4G/LTE, and other links connected anywhere on your corporate or institutional WAN. Whether you’re transferring a few documents or driving real-time POS data, video feeds, and VoIP conversations, SpeedFusion pumps all your data down a single bonded data-pipe that’s budget-friendly, ultra-fast, and easily configurable to suit any networking environment.

This description comes from Peplink’s official website: https://www.peplink.com/technology/speedfusion-bonding-technology/
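To make the bonding idea a bit more concrete, here is a minimal Python sketch of spreading packets over several links in proportion to their bandwidth. It assumes per-packet distribution and uses smooth weighted round-robin as a stand-in scheduler; this is not SpeedFusion’s actual algorithm, and a real bonding implementation also has to handle reordering, loss, and link health. The link names and weights (downstream Mbps) are illustrative.

```python
from collections import Counter
from itertools import islice
from typing import Dict, Iterator


def weighted_schedule(links: Dict[str, int]) -> Iterator[str]:
    """Yield link names forever, each in proportion to its weight.

    Smooth weighted round-robin: every step each link earns its weight,
    the link with the highest running score is chosen, and it pays back
    the total weight. Over any window of sum(weights) steps, each link is
    chosen exactly `weight` times, with picks spread out, not bunched.
    """
    current = {name: 0 for name in links}
    total = sum(links.values())
    while True:
        for name, weight in links.items():
            current[name] += weight
        chosen = max(current, key=current.get)
        current[chosen] -= total
        yield chosen


# Hypothetical weights: downstream Mbps of each link.
links = {"CLARO": 300, "OrbitCable": 10}
first_cycle = Counter(islice(weighted_schedule(links), sum(links.values())))
print(first_cycle)
```

Weighting by bandwidth keeps the slow link from becoming the bottleneck: the 10 Mbps link only gets about one packet in 31.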

There are free alternatives to SpeedFusion, but none of them works as seamlessly. It works so well that sometimes I forget it is even there.

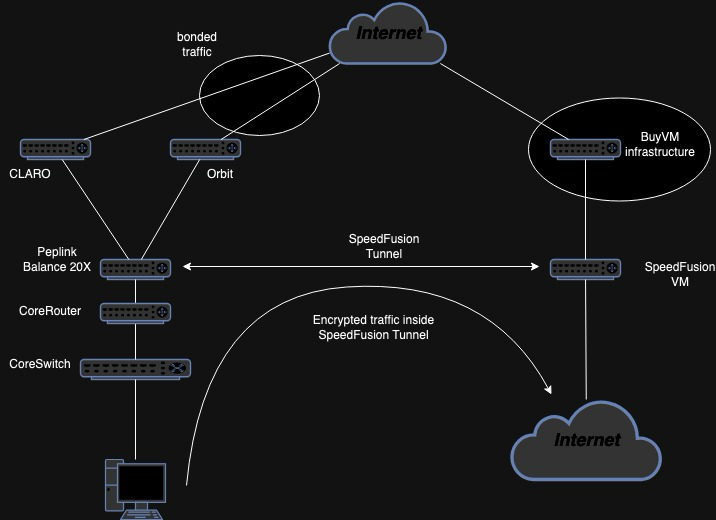

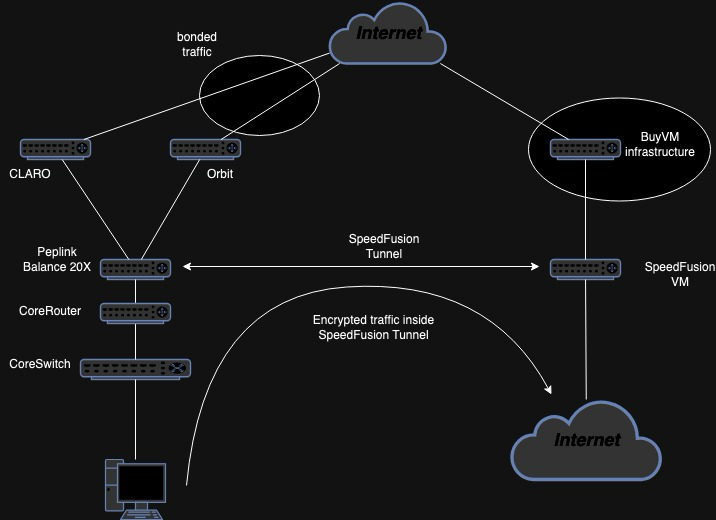

In my case, I have two Internet connections: one with CLARO (300/75 Mbps) and a second one with OrbitCable (10/5 Mbps). Why a second connection? Well, it is cheap, and if CLARO has issues I can stay online to read email or even join a Teams call.

A year ago I was running OPNsense with dual WAN, and it was OK. Then I got my hands on an old Balance 20 (the previous, slower model of the 20X) and played a little with SpeedFusion. At that point I was convinced that this solution is better for multiple Internet connections because of the bonding options. Failover is transparent because the IP of the VM hosting the other end of the SpeedFusion tunnel is the one used to establish connections.

It looks like this (almost…):

My drawing skills are dead.

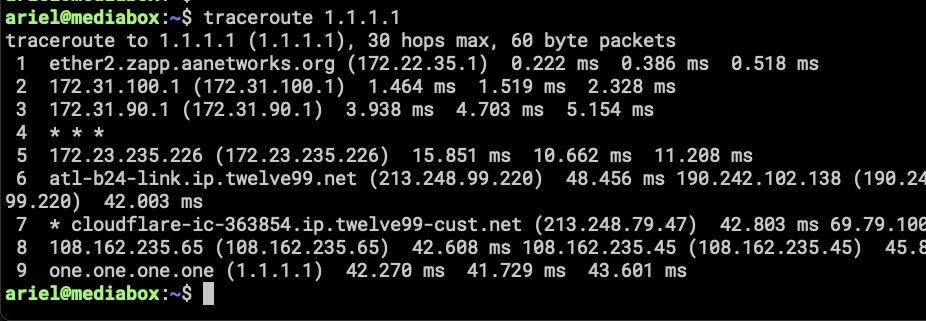

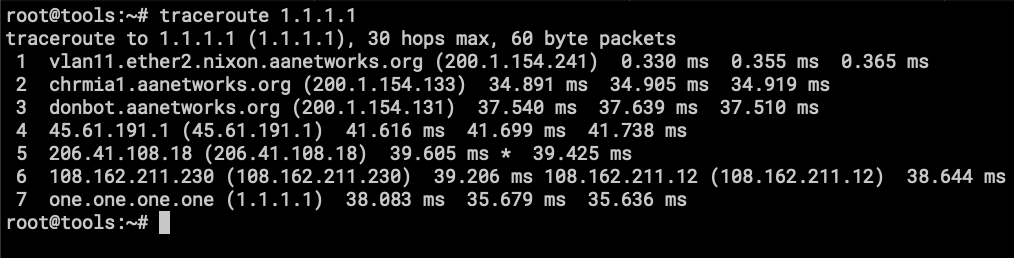

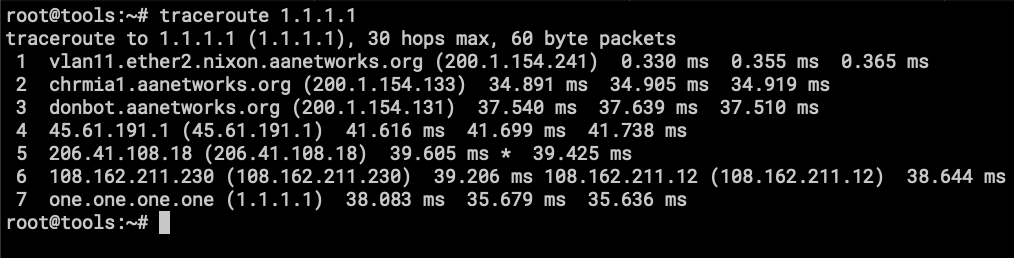

Traceroute from a machine with a policy rule that sends its traffic through the SpeedFusion tunnel. A few machines use this policy and reach the Internet through the bonded tunnel.

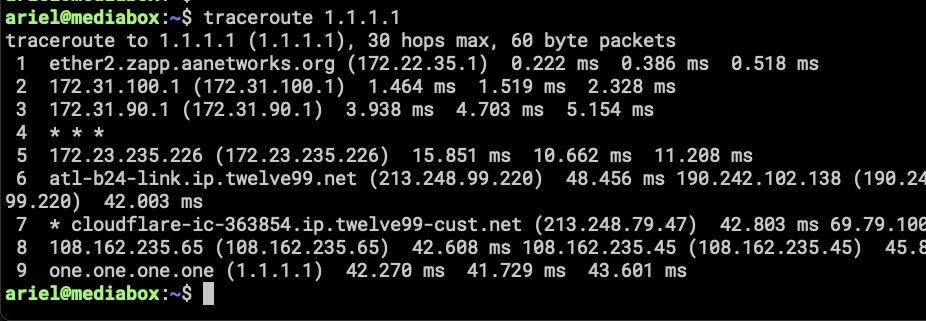

Traceroute from a machine without a routing policy rule, going out via CLARO. This is the default behavior for the rest of the network: if CLARO goes down, the traffic moves over to Orbit.
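The two behaviors in these traceroutes can be summarized with a small Python model. This is a hypothetical sketch, not Peplink configuration: the `Wan` class, the `select_path` function, and the host addresses are made up for illustration, and it assumes the tunnel stays usable while at least one WAN underneath it is up.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Wan:
    name: str
    up: bool


def select_path(src_ip: str, tunnel_hosts: Set[str], wans: List[Wan]) -> str:
    """Return the egress path for traffic coming from src_ip.

    Hosts listed in tunnel_hosts (the policy rule) use the bonded tunnel;
    everything else leaves through the first healthy WAN in priority order.
    """
    healthy = [wan for wan in wans if wan.up]
    if not healthy:
        raise RuntimeError("no WAN is up")
    if src_ip in tunnel_hosts:
        # Assumption: the tunnel survives as long as any WAN beneath it is up.
        return "SpeedFusion"
    return healthy[0].name


wans = [Wan("CLARO", up=True), Wan("OrbitCable", up=True)]
policy_hosts = {"192.168.1.10"}  # hypothetical addresses

print(select_path("192.168.1.10", policy_hosts, wans))  # SpeedFusion
print(select_path("192.168.1.20", policy_hosts, wans))  # CLARO
wans[0].up = False  # simulate a CLARO outage
print(select_path("192.168.1.20", policy_hosts, wans))  # OrbitCable
print(select_path("192.168.1.10", policy_hosts, wans))  # SpeedFusion
```

In this model only the policy-matched hosts keep the same path during an outage; everything else fails over to the other WAN, which means a different public IP for those connections.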

What are the advantages of this?

- Through the SpeedFusion tunnel, remote hosts don’t see my real WAN IPs, so if one of the WANs goes down, existing connections don’t reset.

- Classic dual WAN/load balancing is still available, and with policies you can control how the WANs are used inside the tunnel.

- You can publish internal services on the SpeedFusion VM’s WAN IP address, and you only need to open ports on that VM in the remote data center.

Any disadvantages?

- Yes. Some sites flag my connections as bots/crawlers, and I have to complete CAPTCHAs to get in (Cloudflare, eBay and others).

- Speed: the bandwidth available inside the VPN is 100 Mbps, a hardware limitation of the Balance 20X.

- Price: this model has only one Ethernet WAN, so I have to pay for the second WAN by creating a Virtual WAN, which costs $49/year. The Balance 20 has two Ethernet WANs; I wasn’t aware of this until I got my hands on the 20X.
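A quick back-of-the-envelope check of what the 100 Mbps limit means for my two links. This is a simple sketch that assumes the cap applies to each direction and ignores tunnel overhead and loss, so real numbers will be lower; `bonded_ceiling_mbps` is a hypothetical helper.

```python
def bonded_ceiling_mbps(link_speeds_mbps, tunnel_cap_mbps):
    """Best case for a bonded tunnel: the links' combined speed, clipped by the tunnel limit.

    Ignores encapsulation overhead, packet loss and uneven latency between links.
    """
    return min(sum(link_speeds_mbps), tunnel_cap_mbps)


TUNNEL_CAP = 100  # Balance 20X VPN limit, in Mbps

down = bonded_ceiling_mbps([300, 10], TUNNEL_CAP)  # CLARO + OrbitCable downstream
up = bonded_ceiling_mbps([75, 5], TUNNEL_CAP)      # CLARO + OrbitCable upstream
print(f"down: {down} Mbps, up: {up} Mbps")  # down: 100 Mbps, up: 80 Mbps
```

Downstream, the tunnel is the bottleneck (CLARO alone is three times faster), while upstream both links together still fit under the limit.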

Special Use Case?

I’ve been running a network with its own ASN for almost 6 years. A big part of this network is connecting different Linux VMs with BGP over GRE/WireGuard tunnels, so I can route to the Internet using a /24 of publicly routable IPv4 addresses and a /40 of IPv6 addresses.
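For a sense of scale, both prefixes can be sized with Python’s standard `ipaddress` module. The real prefixes aren’t given here, so this sketch uses the documentation ranges from RFC 5737 and RFC 3849 as placeholders; only the prefix lengths matter for the arithmetic.

```python
import ipaddress

# Documentation prefixes standing in for the real allocations.
v4_block = ipaddress.ip_network("192.0.2.0/24")
v6_block = ipaddress.ip_network("2001:db8::/40")

print(v4_block.num_addresses)                            # 256
print(len(list(v6_block.subnets(new_prefix=48))))        # 256 /48 sites
print(2 ** (64 - v6_block.prefixlen))                    # 16777216 /64 LANs
```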

A MikroTik RB3011 is connected directly to the Peplink. Over this connection it forms a GRE tunnel with another MikroTik (CHR) running in the same virtual network as the SpeedFusion VM. The CHR receives a default route from a Debian VM that has BGP sessions with BuyVM’s routers; there is a lot of configuration in place. Before this setup, I had two WireGuard tunnels to different places to form the BGP sessions; now I only need one, and it runs on top of both WANs.

It’s cleaner, I think… but that is a topic for an upcoming post!